- Published on

Exploring H2O.ai AutoML

- Authors

- Name

- Varun Kruthiventi

- @waxsum8

The short time I have spent on Kaggle, I have realized ensembling (stacking models) is the best way to perform well.

Well, I am not the only one to think so !!

Stacking is a Model Ensembling technique that combines predictions from multiple models and generates a new model.

I am gonna write a new post on model ensembling 🙂

I have experimented with multiple ensembling techniques and made a model with XGboost, LightGBM, and Keras for Zillow Zestimate problem which did perform well.

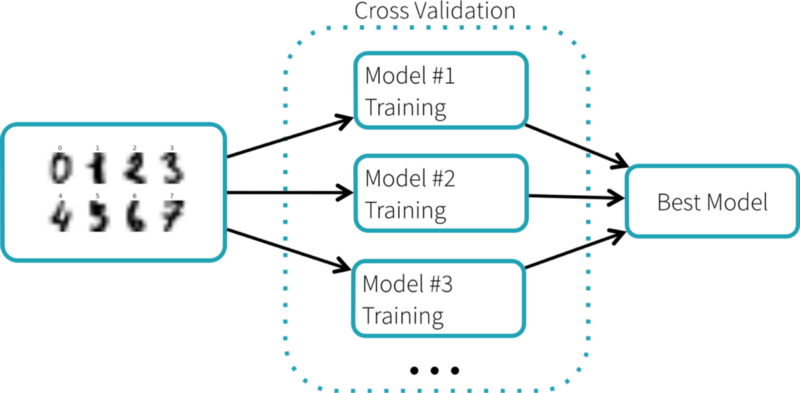

Hyper-Parameter tuning for the base models was done using Cross-Validation + Grid Search. Tuning the parameters of the combined model is where things get strenuous.

There, I began to search for a better way to build ensembled models. I found few frameworks to build better-ensembled models like Auto-sklearn, TPOT, Auto-Weka, machineJS and H2O.ai AutoML.

Auto-sklearn and TPOT provide a Sklearn styled API that can help you get things going quite fast. But H2O.ai Auto ML got better results for me atleast 🙂

H2O.ai is an open source Machine Learning platform which gives you a good bunch of Machine Learning algorithms to build scalable prediction models.

H20 AutoML can help in automating the machine learning workflow, which includes training and tuning of hyper-parameters of models. The AutoML process can be controlled by specifying a time-limit or defining a performance metric-based stopping criteria. AutoML returns a leaderboard with the best models ensembled.

AutoML provides APIs in Python and R that comes with H2O library.

I have decided to give a try on H20 AutoML for Zillow Zestimate problem. I have used R for making the model for making the submission.

Running the AutoML model for 1800 seconds with stopping metric as MAE gave me a Public Leaderboard score of 0.06564.

That’s a good score considering that I haven’t even dealt with basic data preprocessing 🙂